I think I promised a general strategy post & a status report, some time ago. Here goes.

Development strategy

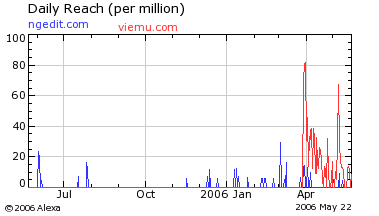

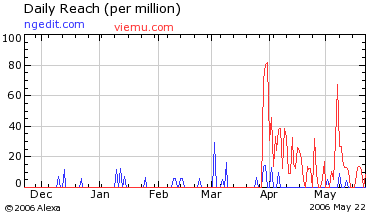

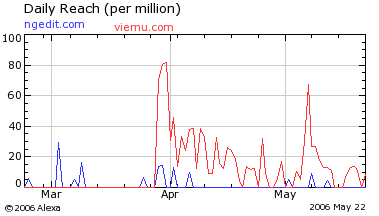

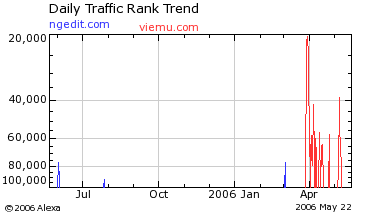

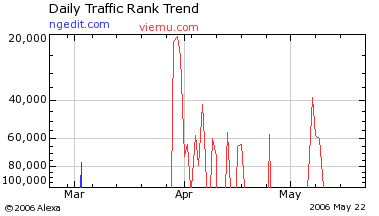

I am currently splitting my time between two development efforts. One of them, ViEmu, has been available for almost 8 months now. It has improved a lot, and sales have been steadily climbing. Although not a stellar success, it’s doing well beyond my realistic forecasts (though not beyond my wildest dreams), and I’m really happy that I decided to do it.

The second one, code-named NGEDIT, has been in development for a bit over a year, and it’s still not ready for release. In the time I’ve been developing it, both my belief in the concept and my disrespect for my own time estimates have grown a lot. I would be very happy to release 1.0 around July or August, one year after the release of ViEmu, but I know that’s still optimistic. And that’s after I’ve decided to cut most of the planned features from version 1.0!

Of course, apart from these clear-cut fronts, and not including my day job, there are other fronts I have to attend to. Customer support, for example, or this blog, for that matter.

I’ll try to summarize, in a general sense, what my current plans for the next few months are. What the main goals are, and how I’m planning to achieve them.

The #1 goal, as you can guess, is to release NGEDIT version 1.0. This is a bit trickier than it sounds. The act of releasing it is, in a general sense, more important than the exact functionality it brings. I have come to this conclusion after over a year in development, and the experience of ViEmu. Emotionally, it’s much better to be working on improving an existing product than it is to be working on a product for its first release, with no users or customers. As long as you are not too impatient to get a lot of sales, having actual users & feedback is a big boost for motivation. Having a few sales helps, as well. And, as long as the product is good and there is a need, sales only get higher as you improve the product.

To get this process going, I’ve cut many planned features from 1.0 so that I can release it before long. You might ask: why not release it right now, in its current state?

A common but not very informative answer would be that it’s still too basic, or unusable. That’s not completely true, as I use it myself. A better answer involves some thought about the market I’m getting into. The text editor market is pretty saturated, and most products out there have many man-years of effort built in. There is at least a general perception of the things a text editor must have. I think releasing it without these features would be too much of a stretch. Rest assured, I’ve carefully removed everything which isn’t essential for 1.0. As with ViEmu 1.0, the first release will be pretty basic, but it will hopefully be a better tool for at least some people out there, and that should trigger the initial dynamic of usage-feedback-improvement.

Apart from these essential elements, NGEDIT 1.0 will also sport some interesting things that are well outside the minimum requirements list. The very complete vi/vim emulation, for one, or the native management of text in any format (no conversion on load/save). There are a few more, but these are probably the most interesting to talk about. Two main forces have resulted in this uncommon feature set. The first is that I’m building NGEDIT 1.0 as the core framework for the really advanced features, which have some unique requirements. The second is that I’m building it to become my favorite editor first, and only then a commercial product. This results in the need for powerful vi/vim emulation, which is bound not to have much relevance as a commercial feature.

So, we could say the road to NGEDIT 1.0 is drawn by three guiding principles, listed in increasing priority:

- III: Build a good foundation for the future versions of the editor, if not fully realized, at least following a scalable design

- II: Release the minimum product that makes sense

- I: Build my favorite editor

This is not a list of principles I try to adhere to. It’s more of a recollection of the kind of decisions I’ve found myself making on intuitive grounds. I’ve seen that I will trade the best design for some functionality, in order to be closer to release, and I’ve found that I’ve traded away every sensible business principle by deciding to implement some very complete (and costly) vi/vim emulation. The fact that my sticking to vi/vim emulation has resulted in ViEmu, which is a nice product, (kind of) validates the principles. Actually, I think it validates them because I find myself enjoying the work, which helps in sustaining the long-term effort, and because the business is gaining momentum. Apart from this, the ViEmu experience has been an incredible sandbox in which to learn, and the lessons learned will play a nice role in the actual release of NGEDIT. For example, the Google SEO front, and also the adwords & clickfraud front.

In a general strategic view, I’m meshing my efforts on NGEDIT 1.0 with steadily improving ViEmu. Even if ViEmu doesn’t have the business potential of NGEDIT, I think that making all the customers of ViEmu happy only helps with the later stages of building the business. One thing to which I haven’t paid too much attention is marketing ViEmu. I think I could easily improve the sales performance of ViEmu with some effort, but I also think this effort falls on the other side of the “makes more sense than working on NGEDIT” line. So far, a bit of Google-tweaking, a bit of adwords, a bit of word-of-mouth, and a deserted market have been successful in building up sales.

This is very different from what I think I should do if ViEmu were the product on which I wanted to base my business. I would have to be working 100% on promoting it while steadily improving it. But, frankly, I don’t think ViEmu would be a sensible sole-business product. Not everyone is dying for vi/vim emulation.

So, what do all the above principles result in, in practice? The first point is that, for the past few months, I’ve been (a) improving ViEmu little by little and releasing new versions, (b) designing and working on the core architecture of NGEDIT, and (c) crossporting ViEmu’s vi/vim core to NGEDIT. The reason for the third point was that, upon using NGEDIT myself, I was sorely missing good vi/vim functionality. It already had some nice vi/vim emulation, written in NGEDIT’s own scripting language, which was the seed for ViEmu, but ViEmu had grown way beyond this seed. Thus, principle (I) kicked in, and I started to crossport ViEmu’s vi/vim engine.

Why do I say crossport? The reason is that I have been rewriting the core in such a way that it can be used both within NGEDIT and within ViEmu. This has imposed some major requirements on the design of ngvi, as I like to call the new core, and it’s one reason it has taken some serious time to develop. This effort has some nice side effects:

- I now have a super-flexible vi/vim core that I can integrate in other products, or use to develop vi/vim plugins for other environments (ah, if only solving interaction problems with other plugins weren’t the worst part!).

- I can now put in work that benefits both products.

- I’ll talk about it later, but I have come up with some neat new programming tricks due to this effort. The payoff for this will come later on, but it’s there anyway.
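To make the idea of a core that lives inside two different products a bit more concrete, here is a minimal sketch of one way such a split could look. It is not ngvi’s actual design: the names `EditorHost`, `ViCore` and `MemoryHost` are made up for illustration, and the core only understands a handful of normal-mode keys (`h`, `j`, `k`, `l`, `x`, `dd`). The point is only that the core talks to an abstract host, so each product would supply its own adapter.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// Hypothetical host interface: everything this toy core needs from an editor.
// Each product (a standalone editor, an IDE plugin) would write its own adapter.
struct EditorHost {
    virtual ~EditorHost() = default;
    virtual std::size_t lineCount() const = 0;
    virtual std::string line(std::size_t row) const = 0;
    virtual void replaceLine(std::size_t row, const std::string& text) = 0;
    virtual void removeLine(std::size_t row) = 0;
    virtual std::size_t row() const = 0;
    virtual std::size_t col() const = 0;
    virtual void moveTo(std::size_t row, std::size_t col) = 0;
};

// Host-independent core: all editing goes through the EditorHost interface.
class ViCore {
public:
    explicit ViCore(EditorHost& host) : host_(host) {}

    void feedKey(char key) {
        if (pendingD_) {                 // second key of a "d?" command
            pendingD_ = false;
            if (key == 'd') deleteCurrentLine();
            return;                      // any other key cancels it in this sketch
        }
        const std::size_t r = host_.row(), c = host_.col();
        switch (key) {
            case 'h': if (c > 0) host_.moveTo(r, c - 1); break;
            case 'l': host_.moveTo(r, clampCol(r, c + 1)); break;
            case 'j': if (r + 1 < host_.lineCount()) host_.moveTo(r + 1, clampCol(r + 1, c)); break;
            case 'k': if (r > 0) host_.moveTo(r - 1, clampCol(r - 1, c)); break;
            case 'x': deleteChar(); break;
            case 'd': pendingD_ = true; break;
            default: break;              // unknown keys are ignored
        }
    }

    void feedKeys(const std::string& keys) { for (char k : keys) feedKey(k); }

private:
    // In normal mode the cursor rests on a character, never past the end.
    std::size_t clampCol(std::size_t row, std::size_t col) const {
        const std::size_t len = host_.line(row).size();
        return len == 0 ? 0 : std::min(col, len - 1);
    }
    void deleteChar() {
        const std::size_t r = host_.row(), c = host_.col();
        std::string text = host_.line(r);
        if (text.empty()) return;
        text.erase(c, 1);
        host_.replaceLine(r, text);
        host_.moveTo(r, clampCol(r, c));
    }
    void deleteCurrentLine() {
        const std::size_t r = host_.row();
        if (host_.lineCount() == 1) {    // keep one (empty) line in the buffer
            host_.replaceLine(0, "");
            host_.moveTo(0, 0);
            return;
        }
        host_.removeLine(r);
        // Simplification: go to column 0 rather than the first non-blank.
        host_.moveTo(std::min(r, host_.lineCount() - 1), 0);
    }

    EditorHost& host_;
    bool pendingD_ = false;
};

// Simplest possible adapter: an in-memory buffer, handy for testing the core.
class MemoryHost : public EditorHost {
public:
    explicit MemoryHost(std::vector<std::string> lines) : lines_(std::move(lines)) {
        assert(!lines_.empty());
    }
    std::size_t lineCount() const override { return lines_.size(); }
    std::string line(std::size_t row) const override { return lines_.at(row); }
    void replaceLine(std::size_t row, const std::string& text) override { lines_.at(row) = text; }
    void removeLine(std::size_t row) override {
        lines_.erase(lines_.begin() + static_cast<std::ptrdiff_t>(row));
    }
    std::size_t row() const override { return row_; }
    std::size_t col() const override { return col_; }
    void moveTo(std::size_t row, std::size_t col) override {
        assert(row < lines_.size());
        row_ = row;
        col_ = col;
    }
private:
    std::vector<std::string> lines_;
    std::size_t row_ = 0, col_ = 0;
};
```

With a `MemoryHost` holding the single line `hello`, calling `feedKeys("llx")` on a `ViCore` attached to it leaves the line as `helo`. A real host adapter would map the same handful of calls onto the editor’s or IDE’s own buffer and cursor APIs, which is where most of the integration pain would live.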

The new core is almost finished, with only ex command line emulation left to be crossported. For testing, this core is being used in NGEDIT. That way, ViEmu can advance as a separate branch. As soon as ngvi is finished, I will start implementing ViEmu 2.0 based on ngvi. This new core already brings some functionality that ViEmu is lacking, and I will be just plain happy that most of ViEmu is now officially part of NGEDIT.

And after this, I have a couple major features in NGEDIT that need to be implemented, and a gazillion minor loose ends. If you are an experienced developer, you’ll know it’s those loose ends that put the July/August release date in danger.

Names, names, names

As I mentioned recently, NGEDIT will not be the name of the final product. I already have the candidate for the name, and there’s only one thing pending before it becomes official: I need to check it with a Japanese person. I haven’t had much success asking here on the blog, or asking the Japanese customers of ViEmu. Understandably, I haven’t pressed my Japanese customers too hard. They are customers, after all!

I don’t want to reveal the name just yet, as I don’t want even more confusion if it ends up not being the final name. I would also like to have at least a placeholder page ready when I reveal the name.

Apart from this name change, I also intend to do something with the blog’s name. I plan to blog more and more in the future, once the business no longer critically requires all my energy. I also plan to cover other areas: programming languages, software design, A.I., O.S.S., operating systems. I’d even like to write on things like economics or the psychology of programming! I think a more general name would be a good idea.

Given that the new editor will have its own new name, that I plan to move ViEmu to its own domain (viemu.com, already up with a simple page), and that the blog needs another name, ngedit.com will very likely end up pretty empty.

All that pagerank accumulated for nothing… sigh! In any case, now should be the best moment to do the deep reforms.

I’ll let you know as these names are ready for general exposure.

The blog

If you have been reading for long enough, you will probably have noticed that I post less often than I used to. The main reason is that development itself already drains most of my available energy. There is not much I can do about that, except wait for days when I have more energy, and wait for the moment when NGEDIT is finally released. I will feel much better when NGEDIT is out there, and I think I’ll be able to concentrate better on other things. Having put in so much effort so far, and not having it available for download & for sale, puts a lot of pressure on me.

But there are also other reasons. For one, I have many interesting topics I’d like to cover, but which I don’t want to cover just yet. I prefer to wait until I have a working product, before bringing up some of these areas. Should be better business-wise.

This ends up meaning that I don’t want to write about the stuff I want to write about. Ahem.

Anyway, I have come up with an area I’d like to cover with a series of posts. It’s about the techniques I have been using for the development of ngvi, which could be described as the application of dynamic & functional programming to C++. Some of the techniques will be applicable to C++ only, but many others apply to general imperative/OO programming. Hopefully it will be interesting to (some of) you.